
AI does deliver

Researchers in artificial intelligence (AI) have celebrated a string of successes with neural networks, computer programs that roughly mimic how our brains are organized. There is little doubt that AI enhances the speed, precision and effectiveness of human efforts. Growing more impressive by the month and embedded all around us, it is an invaluable partner: far more reliable than we are for memory and specific detail, and able to support our own reasoning.

I am particularly interested in large language models (LLMs). Their kind of assisted intelligence could even become a tangible tool for generating creative ideas from known data, offering an extension of the creative tools that are already part of Effective Intelligence (EI).

Trained on human-generated data, large language models are very adept at noticing patterns in that data, within the limited ways they can currently use it. But learning from existing written material, even massive quantities of it, might not be enough for AI to come up with solutions to problems we have never encountered before. Children, with their amazing human intelligence, can do this very early on. Just watch a two-year-old…

How it works

AI relies on prediction based on statistical analysis of enormous quantities of data, carried out at extreme speed, which gives it access to conclusions beyond the practical reach of any human being. It is supposed to work ‘intelligently’, for example by finding patterns in vast amounts of information, and the hope is that its algorithmic decision-making will be more consistent than human judgement. A ‘recurrent neural network’, for example, feeds its own internal state back into itself at each step as it works through a sequence, so that every new prediction takes account of what came before.
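To make the idea of a network feeding back into itself a little more concrete, here is a toy sketch in Python. It is a single-unit recurrent step with arbitrary, made-up weights (`w_x`, `w_h` and `b` are illustrative assumptions, not values from any real model); real networks have millions or billions of learned parameters. The only point is that each step's hidden state `h` is passed back in at the next step, so the same input can produce different outputs depending on what came before.

```python
import math


def rnn_step(x: float, h_prev: float, w_x: float = 0.5, w_h: float = 0.8, b: float = 0.0) -> float:
    """One step of a toy single-unit recurrent network.

    The new hidden state depends on the current input x AND on the previous
    hidden state h_prev; that feedback loop is what makes it 'recurrent'.
    The default weights are arbitrary illustrative values, not trained ones.
    """
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(inputs: list[float]) -> list[float]:
    """Feed a sequence through the toy network, returning each hidden state."""
    h = 0.0  # start with no 'memory' of earlier inputs
    states = []
    for x in inputs:
        h = rnn_step(x, h)  # this step's state is fed into the next step
        states.append(h)
    return states
```

Feeding it the same input three times, `run_rnn([1.0, 1.0, 1.0])`, gives three different, steadily rising values, because each step carries forward the state left by the one before.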

An algorithm is a journey: a step-by-step process for resolving a particular kind of problem. AI is trying to work as a substitute for a brain, and is getting closer, so it will be critical to know what AI and the brain have in common and what they do not.

AI cannot be a brain, because it does not have a human body. The AI ‘black box’ consists of complex computerised processes that produce a solution. Machine learning assumes that these processes can learn, by analysing their own data, to find significant patterns that lead to decisions without people getting in the way. But no one can tell how those black-box decisions were reached.

This presents a huge risk. Our human instinct is to prefer decisions based on transparent ‘rules’. People need the opportunity to give feedback to AI, so that its processes can be subjected to shrewd questioning. Is that open to us all? In practice we can only question what it produces: its results. So we MUST do that with our own intelligence. What thinking tools do you have to help you? This is where Effective Intelligence thinking tools can come to your assistance.

Pitfalls and consequences

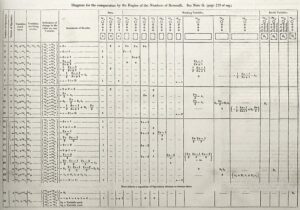

As early as 1843, Ada Lovelace* wondered about the limits of what Charles Babbage’s Analytical Engine could do. And it has been said that “AI is not as good as you hoped, though not as bad as you fear”.

Simply put, AI has no common sense: it is “incredibly smart and shockingly stupid.” It is a superpower with a very narrow scope, rather like an insect that does just certain things exceptionally well. It can do one thing well but cannot adapt that skill to other tasks, so it lacks the ‘general’ intelligence of our brains.

“As AI increases in complexity, some models (based on statistical probabilities) reveal new biases and inaccuracies in their responses,” according to Stephen Ornes in “The Unpredictable Abilities Emerging From Large AI Models” (Quanta, March 16th 2023). Google’s Bard works 100,000 times faster than a brain, but the machine is not sentient, so it is not aware of what it is delivering. This places enormous demands on the soundness of the algorithmic rules, which are either given to these systems by human beings or derived by the systems themselves from the examples we gave them. It is people who create the flawed algorithms, often based on averages, whereas some cases may be better handled by an individual person.

People may also be misled by so-called ‘AI hallucinations’ which, in the absence of rigorous auditing, produce false or misleading interpretations and advice. Notoriously, AI recruitment tools have proved unreliable or even biased, for example when they rely on facial analysis. Fake legal precedents have been submitted to courts, misleading them and even resulting in miscarriages of justice. LLMs, the technology that powers chatbots like ChatGPT, might be able to write a poem or an essay on demand, but they are also infamous for hallucinating: they are known to make things up and present false facts as true.

For a shopping website, AI analysing the behaviour of people in a target market will do so a million times better than any human could, says Ken Cassar, CEO of London-based AI startup Umnai. “If the model is 85% accurate, that’s brilliant, a paradigm shift better than humans could do at scale. But if you’re landing aeroplanes, 85% is no good at all.”
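A quick back-of-the-envelope calculation shows why the same 85% figure can be brilliant in one setting and unacceptable in another. The volumes below are hypothetical, chosen only for illustration, and the sketch assumes that each decision is independently right 85% of the time.

```python
def expected_errors(accuracy: float, decisions: int) -> int:
    """Expected number of wrong decisions, assuming each decision is
    independently correct with probability `accuracy`."""
    return round((1 - accuracy) * decisions)


# Hypothetical volumes, for illustration only.
recommendations = 1_000_000  # product suggestions on a shopping site
landings = 1_000             # aircraft landings

print(expected_errors(0.85, recommendations))  # 150000 poor suggestions
print(expected_errors(0.85, landings))         # 150 failed landings
```

A large number of irrelevant product suggestions costs a shopping site very little; 150 failed landings would be a catastrophe, which is exactly Cassar’s point.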

There are other pitfalls, such as flawed algorithms in medicine or in spotting benefit fraud.

With technology evolving at an astonishing rate and the human element being minimised, there is real potential for mistakes, honest and even tragic ones. AI predictions and results need to be checked using the questioning skills of Effective Intelligence.

Effective Intelligence offers needed quality assurance

Through 50 years of research, development and practical application across five continents, Effective Intelligence (EI) has honed core questions that apply generically, such as the samples below.

Here are questions you can raise when you want to:

- know enough about the right issue to tackle: “Are there enough relevant and accurate facts?”

- think up more solutions, especially for what seems ‘impossible’: “Were exceptional ideas explored enough?”

- persuade others to agree with your proposal: “Have their objections been considered in advance?”

- think everything through to a sound outcome: “Does the solution work and also support our strategy?”

These four prepositions, ‘about’, ‘up’, ‘with’ and ‘through’, are magic keys for resolving problems, especially in territory entirely new to you. There, you need the skill of ‘questioning in ignorance’.

Questioning in ignorance

It is actually possible to ask shrewd questions without knowing anything about the subject. Just as you can observe people stupidly asking questions that have no value at all for the situation in hand, there are, in contrast, some questions that always deserve to be asked for particular thinking tasks.

So the first step is to identify the kind of situation you are facing. The thought maps of Effective Intelligence help you do this, and also suggest the most useful and relevant kinds of questions to marshal.

This means that you can even help with, and evaluate, someone else’s decision without much knowledge of the situation. You can certainly see the thinking a particular task requires, and can even gain insight into how someone else is thinking.

Partnership is ideal

Experts are of two kinds:

- those with huge amounts of factual knowledge

- and those with the process skills to ask the relevant questions to reach any knowledge they don’t have

Those with process skills travel light and fast, which is ideal for a top business consultant, senior manager or director. While AI is excellent at managing data efficiently, human process skills offer more scope for the strategic and subtle aspects of a task.

I think the ideal is a partnership between AI and EI. Take medical diagnosis in fields such as radiology, pathology and oncology, where it eventually becomes clear whether a diagnosis was right or wrong. Soon, the process will be entirely digital. Yet most patients don’t want their diagnosis from a machine, but from someone who has empathy and human understanding. We could let the computers take the lead on judgements, predictions and diagnoses, and then let people lead when there is doubt, or when others need to be convinced or persuaded. The patient wants to hear the news from a compassionate person who can help them understand and accept it. Moreover, medical professionals, who can form interpersonal connections and tap into social drives, stand a better chance of getting patients to understand and follow the proposed treatment.

Looking ahead to our own development of Effective Intelligence, an online participant using EI could be encouraged to treat AI as an expert tutor: one that can be dropped as soon as they get back into their stride, and picked up again if they lose confidence about the best way to tackle the task in hand. This might offer a tangible extension of the creative tools, instruments, maps and conceptual questioning patterns that are already part of Effective Intelligence.

Action to take

If you have not already found out your own thinking Profile, go to ‘See inside YOUR thinking’.

* Ada Lovelace https://en.wikipedia.org/wiki/Ada_Lovelace